Imaging and clinical features of patients with 2019 novel coronavirus SARS-CoV-2

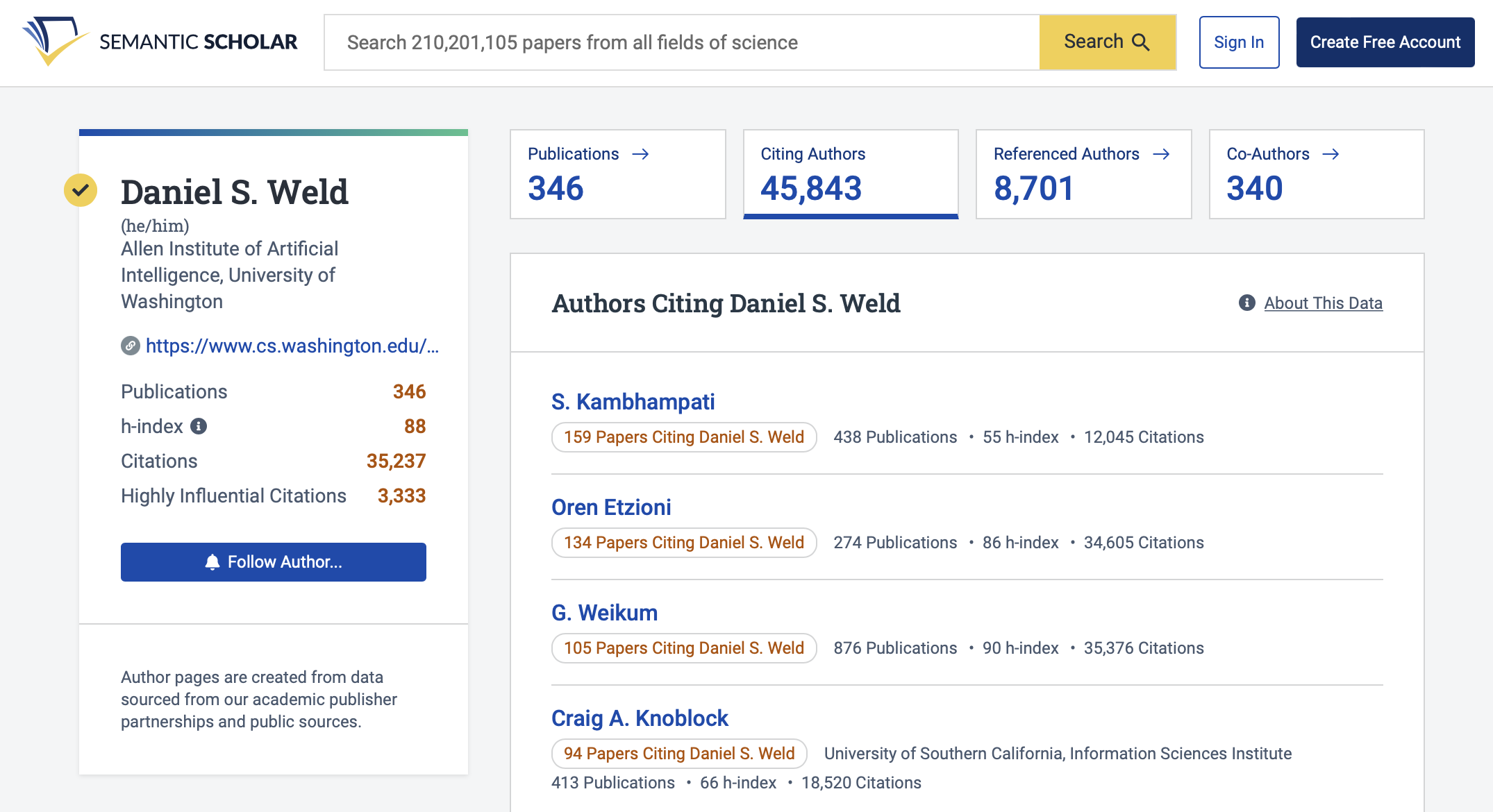

Background The pneumonia caused by the 2019 novel coronavirus (SARS-CoV-2, also called 2019-nCoV) recently broke out in Wuhan, China, and has been named COVID-19. As the disease has spread, similar cases have also been confirmed in other regions of China. We aimed to report the imaging and clinical characteristics of patients infected with SARS-CoV-2 in Guangzhou, China.
Methods We collected all patients with SARS-CoV-2 infection confirmed by real-time polymerase chain reaction (PCR) between January 23, 2020, and February 4, 2020, at a designated hospital (Guangzhou Eighth People’s Hospital). This analysis included 90 patients (39 men and 51 women; median age, 50 years; age range, 18–86 years). All included patients underwent non-contrast-enhanced chest computed tomography (CT). We analyzed the patients’ clinical characteristics, as well as the distribution, pattern, morphology, and accompanying manifestations of the lung lesions. Follow-up chest CT images obtained after 1–6 days (mean, 3.5 days) were also evaluated to assess radiological evolution.
Findings Most infected patients had a history of exposure in Wuhan or to infected patients and presented mostly with fever and cough. More than half of the patients had bilateral, multifocal lung lesions with a peripheral distribution, and 53 (59%) had more than two lobes involved. Among all included patients, COVID-19 pneumonia presented with ground glass opacities in 65 (72%), consolidation in 12 (13%), a crazy paving pattern in 11 (12%), interlobular thickening in 33 (37%), adjacent pleural thickening in 50 (56%), and linear opacities in 55 (61%). Pleural effusion, pericardial effusion, and lymphadenopathy were uncommon. Baseline chest CT showed no abnormalities in 21 patients (23%), but 3 patients presented with bilateral ground glass opacities on the second CT after 3–4 days.
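Each percentage in the Findings is a count out of the 90 included patients. As a quick consistency check, the minimal sketch below recomputes those proportions from the counts quoted in the text, assuming conventional round-half-up rounding to whole percentages. The names `TOTAL_PATIENTS`, `REPORTED`, `percent`, and `mismatches` are hypothetical helpers introduced only for this illustration.

```python
# Counts and rounded percentages as quoted in the Findings.
# Each count is out of the 90 included patients (39 men + 51 women).
TOTAL_PATIENTS = 90
MEN, WOMEN = 39, 51

REPORTED = {
    "more than two lobes involved": (53, 59),
    "ground glass opacities": (65, 72),
    "consolidation": (12, 13),
    "crazy paving pattern": (11, 12),
    "interlobular thickening": (33, 37),
    "adjacent pleural thickening": (50, 56),
    "linear opacities": (55, 61),
    "no abnormality on baseline CT": (21, 23),
}


def percent(count: int, total: int = TOTAL_PATIENTS) -> int:
    """Return count/total as a whole-number percentage, rounding halves up.

    Uses integer arithmetic: floor(100 * count / total + 0.5).
    """
    return (200 * count + total) // (2 * total)


def mismatches() -> list[str]:
    """List the findings whose recomputed percentage differs from the quoted one."""
    return [
        label
        for label, (count, quoted) in REPORTED.items()
        if percent(count) != quoted
    ]


if __name__ == "__main__":
    for label, (count, quoted) in REPORTED.items():
        print(f"{label}: {count}/{TOTAL_PATIENTS} = {percent(count)}% (quoted {quoted}%)")
    print("mismatches:", mismatches())
```

With the counts above, every recomputed value matches the quoted percentage, and the sex breakdown sums to the cohort size.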
Conclusion SARS-CoV-2 infection can be confirmed based on the patient’s history, clinical manifestations, imaging characteristics, and laboratory tests. Chest CT examination plays an important role in the initial diagnosis of novel coronavirus pneumonia. Multiple patchy ground glass opacities in multiple lobes of both lungs, with a peripheral distribution, are typical chest CT features of COVID-19 pneumonia.

TLDR

SARS-CoV-2 infection can be confirmed based on the patient’s history, clinical manifestations, imaging characteristics (including the typical chest CT features of COVID-19 pneumonia), and laboratory tests.